Recently, I had the opportunity to speak at the Sydney SEO Conference in 2025. Over the past couple of years, I haven’t met many SEOs who have explored and understood bots and log files. So let’s dig in and hopefully, I can help!

Table of Contents

Have you heard of the Dead Internet Theory? It’s a conspiracy theory that the internet will one day mainly consist of bots and automated-generated content. Currently, it’s feeling less like a conspiracy theory and more like truth. If you’ve been on social media anytime lately you’ll have noticed an increase in bots and less real life.

Is Dead Internet Theory true yet?

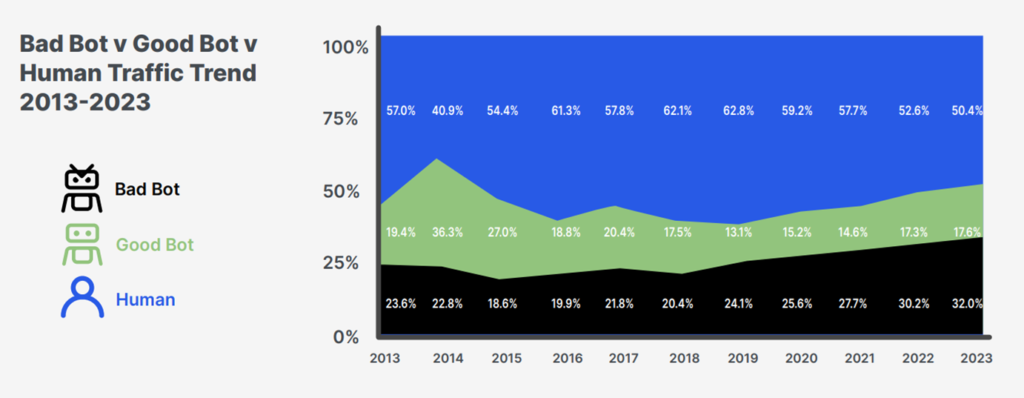

So how are we tracking? Imperva released a solid report called the Bad Bot Report which as of 2023 puts the Bots at 50% of traffic on the internet.

Good Bots – allow online businesses and products to be found by prospective customers, with examples including search engine crawlers like GoogleBot and Bingbot.

Bad Bots – web scraping, competitive data mining, personal and financial data harvesting, brute-force login, digital ad fraud, spam, transaction fraud, and more.

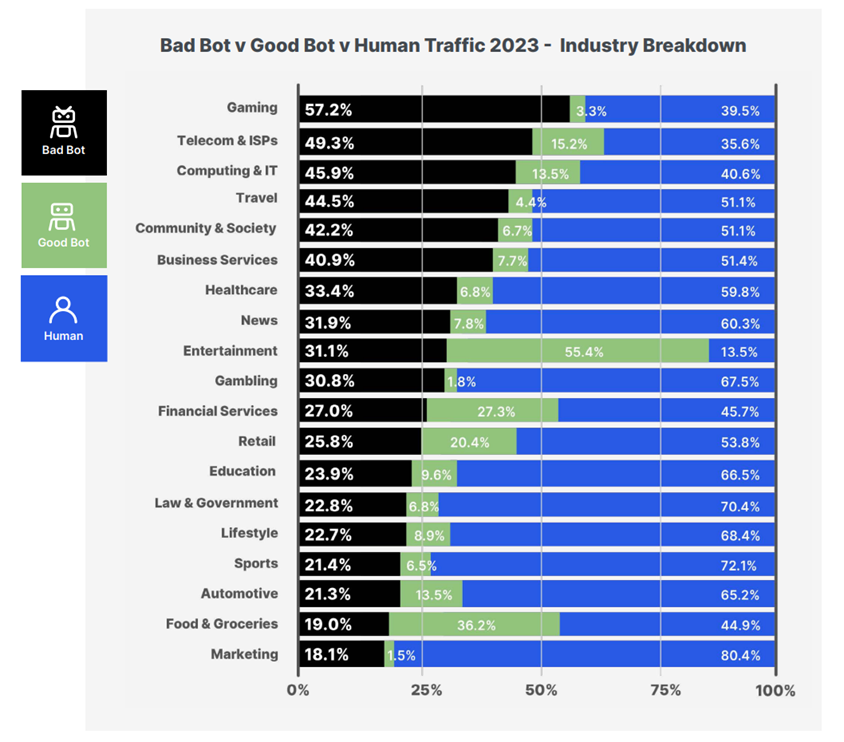

It’s important to remember this does vary by industry, with Gaming seeing the largest number of “bad bots”. Imperva’s report includes some fascinating numbers including cheating in gaming or seat spinning in the travel industry.

So what does this have to do with SEO and log files? I think SEOs love to pretend that we are optimising for the user. And for the most part, we are. We know from Google’s Anti-Trust trial they use click data to rank websites.

However, with the increase in Zero Click Searches, AI Overviews and the rise in more AI alternatives that we need to start keeping an eye on bots.

What are bots doing on my websites?

To help the Sydney SEO community understand log files and bots, I’ve used 2 of my hobby sites Dog Games and Safe Suburbs to illustrate what you can discover in log files.

Here are some statistics about them both to start us off for January.

| Website Details | Dog Games | Safe Suburbs |

|---|---|---|

| Website Type | Ecommerce | Programmatic |

| URLs (HTML, JS, CSS, Images) | 700 | 419 |

| HTML Pages | 121 | 372 |

| Monthly Users | 1,800 | 1,000 |

| Organic Audience | Dog enrichment toy keywords | Programmatic, long tail, crime statistic keywords |

| Number of Logs | 120,000 | 33,000 |

What is a log file?

Every time a user or bot (a client) loads your website whether it’s a page, image, javascript or CSS file they leave a trace in the form of a log. When we are talking about log files in the form of SEO, we are talking about access logs. These will usually be in an Apache or Nginx format.

A log file will look similar to below with a whole range of different user agents, pages, IPs and time stamps. We can use these files to find errors or information about what bots or users are experiencing on our websites.

66.249.68.68 - - [01/Feb/2025:04:57:22 +0000] "GET /slow-feeders/ HTTP/1.1" 200 26091 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" 40.77.167.1 - - [01/Feb/2025:06:17:50 +0000] "GET /slow-feeders/ HTTP/1.1" 301 306 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/116.0.1938.76 Safari/537.36" 38.18.17.226 - - [01/Feb/2025:06:23:27 +0000] "GET /slow-feeders/ HTTP/1.1" 200 172784 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://perplexity.ai/perplexitybot)" 51.222.253.4 - - [01/Feb/2025:09:14:15 +0000] "GET /slow-feeders/ HTTP/1.0" 200 172503 "-" "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)" 72.14.201.175 - - [01/Feb/2025:15:18:14 +0000] "GET /slow-feeders/ HTTP/1.1" 200 172804 "https://www.google.com/" "Mozilla/5.0 (Linux; Android 10; K) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/132.0.0.0 Mobile Safari/537.36"

Where to find log files?

If you have an infrastructure or IT team, they can usually get them for you off your server or CDN such as Cloudflare. Make sure to ask for Access Logs not log files in general. As they would have a number of different logs such as error logs or even plugin logs.

If you are working in enterprise or on massive websites, there is a chance they are not storing access logs. This could be because for massive websites it can get very expensive to store them.

Finding log files on your own server

If you are hosting your own sites, you can usually find them through FTP or your server’s files. They are usually in a folder called logs. In some cases, they won’t be there in which case, reach out to your hosting company to ask if they can store them for you.

Honestly, finding your log files is the hardest part of log file analysis!

How to read an Apache or Nginx log

For the next part, we are going to look at what makes up a log in a log file. Let’s use this log as an example:

66.249.68.68 - - [01/Feb/2025:04:57:22 +0000] "GET /slow-feeders/ HTTP/1.1" 200 26091 "/dog-puzzles/" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

IP Address of the client: 66.249.68.68

Most people will be familiar with IP addresses. This is the IP of the client (bot or user) that has hit your website. This will vary and can be used to verify if the bot is who they say they are. Most “good” bots, will maintain the same consistent set of IP addresses.

In the example above, “66.249.68.68” is one of Google Bot’s which can be verified in their crawler lists.

Date & Time of the Request: [01/Feb/2025:04:57:22 +0000]

This one’s, pretty straightforward. The date and time that the client hit the URL. Make sure to check which timezone the server is logging in and convert to yours.

Request Type & URL Path: GET /slow-feeders/

This is where things start to get a bit more interesting. Here we can see what URL the client is hitting.

The GET part explains that the client is retrieving data from the server. You will also see POST in your log files which is when your server might be instead posting.^

The URL can include parameters which can be incredibly helpful to find issues. Below you can see Googlebot is regularly crawling URLs that include Add to Cart parameters. I need to fix this as it’s creating thousands of unique URLs. This error can also be found in Google Search Console.

66.249.65.203 - - [31/Dec/2024:23:29:07 +1100] "GET /brands/west-paw/rumbl-treat-dispensing-dog-toy/?add-to-cart=356 HTTP/1.1" 200 34402 "-" "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.6778.139 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

Protocol: HTTP/1.1

This is the HTTP protocol the client used to retrieve the URL. In most cases, it will be HTTP/1.1. For those new to HTTP Protocols, it’s the method that the computer or server uses to request or send information.

You may be familiar with HTTP/2, when you’re looking at site speed issues. It’s faster and more efficient. In the case of log files, you’ll expect to see HTTP1.1. Back in the day, we used to be SPDY which is no longer available.^

Status Codes: 200, 3xx, 4xx, 5xx etc.

As an SEO, you should be familiar with this one! It’s the status code that the server has responded with for the client. This is where we can start finding those vital errors that need cleaning up.

301 Redirect Example

One example, of an error or insight you could find would be a 301 log for Google bot for an old page. I know that there are no internal links pointing to this URL and I redirected it back in May 2024.

Please keep your 301s in place. I’ve worked on many old websites and can tell you, Googlebot will continue to crawl them. There could be old backlinks out on the web you do not know about.

66.249.65.202 - - [05/Jan/2025:03:24:50 +1100] "GET /tug-toys/ HTTP/1.1" 301 0 "-" "Mozilla/5.0 (Linux; Android 6.0.1; Nexus 5X Build/MMB29P) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.6778.139 Mobile Safari/537.36 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

404 Example

Below is an example of GPT hitting a 404. Googlebot and Bingbot are hitting this URL too.

Now this could be found by crawling your site with Screaming Frog or your preferred crawler. But sometimes a crawl will not pick up all 404 errors. Sometimes you may have an unlinked page that you forget about.

4.227.36.126 - - [02/Jan/2025:12:53:21 +1100] "GET /buster/ HTTP/1.1" 404 17792 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)"

Total Bytes: 26091

Did you want yet another data point for site speed? The Total Bytes will tell you the size of the request. It can be a great way to discover URLs or code files that are too large.

HTTP Referrer: /dog-puzzles/

Some logs will include the URL path that referred them, however, it’s quite common that this isn’t included.

User Agent

You’ve then got the user agent, which will tell you who the log is about. Most of the time it will include a URL that you can find out more about the block and how to block them.

#Googlebot "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" #Bingbot "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm) Chrome/116.0.1938.76 Safari/537.36" #DuckDuckGo "https://duckduckgo.com/" "Mozilla/5.0 (Android 15; Mobile; rv:133.0) Gecko/133.0 Firefox/133.0" #Open AI's LLM Bot "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; GPTBot/1.2; +https://openai.com/gptbot)" #Open AI's Search Bot "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/131.0.0.0 Safari/537.36; compatible; OAI-SearchBot/1.0; +https://openai.com/searchbot" #Perplexity's Bot "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; PerplexityBot/1.0; +https://docs.perplexity.ai/docs/perplexity-bot)"

How to analyse this data?

For my analysis, I used Screaming Frog Log File Analyser and then built a custom solution with Python as well. You discover which pages Googlebot or other bots are crawling a lot or not enough and what errors you need to fix.

It’s really up to you to explore and dig into the data.

Are my websites dead yet?

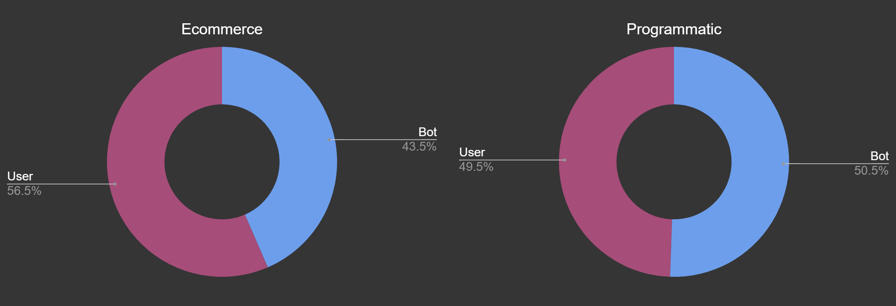

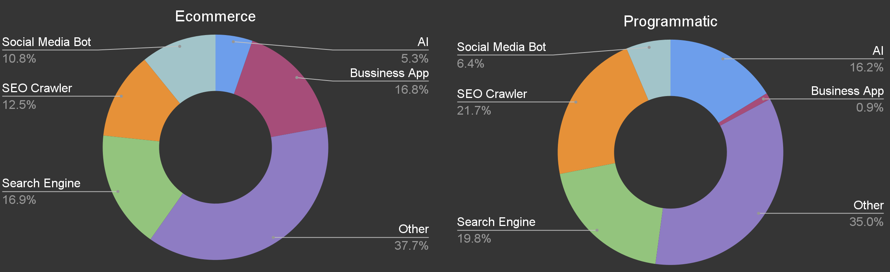

I’m happy to say that my websites still have users landing on them. However, the numbers are pretty close to Imperva’s report. I see more users than bots on my e-commerce site with 56% users vs 43.5% Bots. My programmatic is different with 49.5% users vs 50.5% bots, we should note that the site is quite out of date, but still ranks well.

Which bots are hitting my sites?

For this study, I’ve categorised the more common bots and left others under Other. There will always be a range of different bots hitting your site. For example “Business App” is 16.8% of bots on my e-commerce, this includes operational or CRM bots communicating with the website.

Search Engines vs AI

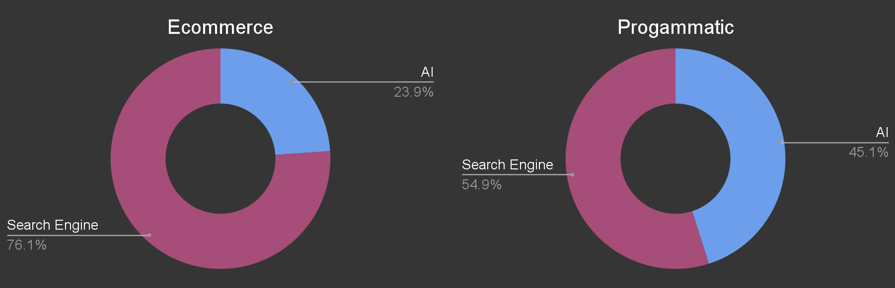

When we look at AI vs Search engines we see quite different numbers when comparing the two different types of bots. The E-commerce saw AI 23.9% vs Search Engine 76.1% which was very different to the programmatic with 54.9% search engine and 45.1% AI.

When I looked into which pages AI likes to hit, it has a preference for informational pages, which could be the reason we see more AI on the programmatic. The e-commerce only has 6 blog posts on it. I’m also curious to see if I update the data on the programmatic and whether this split would change.

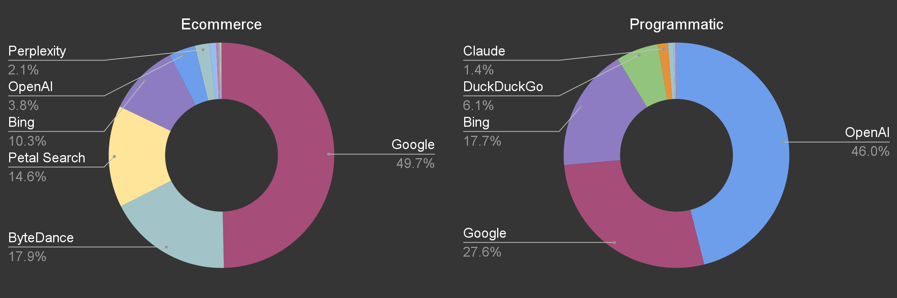

When we break it down we notice that both sites have very different bots hitting them. OpenAI seems obsessed with my programmatic with 46% of all hits vs only 3.8% on my E-commerce. Whereas my E-commerce has more Perplexity or Petal Search which is used for Huawei devices.

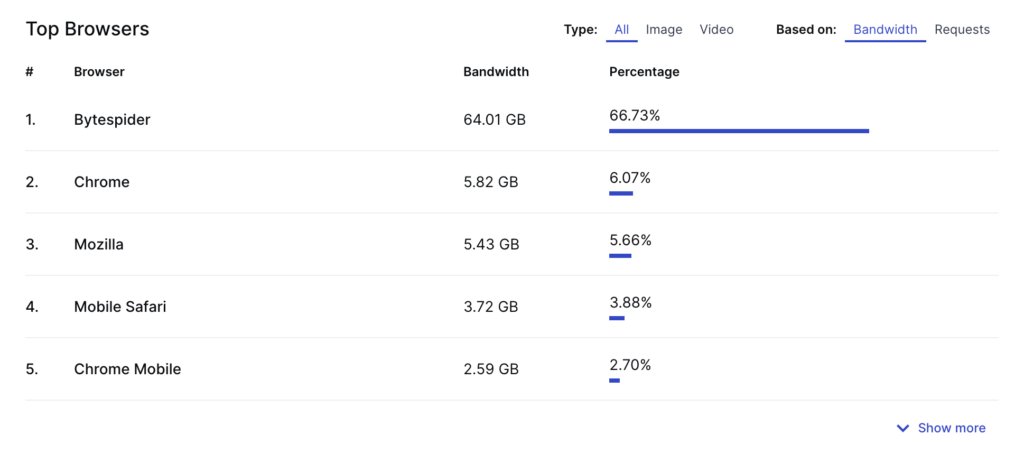

BtyeDance is 17.9% on my E-commerce which concerns me as it’s not entirely clear what ByteDance are doing with the data. For those that don’t know, ByteDance is the owner of TikTok. It’s said allegedly that this bot is training Doubao. There are rumours it could be used for Deepseek, however, I haven’t found any proof of this yet.

SEO Bots aren’t really helping

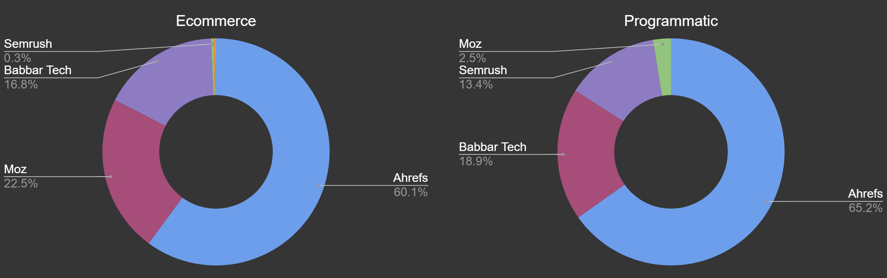

Then there’s the question of SEO Bots. Our industry is behind an awful lot of bandwidth usage, which isn’t fantastic for the environment either.

Ahref’s is a lot larger, however, it is worth mentioning this includes their search engine Yep and is listed on Cloudflare’s verified bots along with Moz and Semrush.

Can we even block AI & SEO Bots?

The good news is yes! You can, in your robots.txt or through your htaccess or CDN. Below’s an example of blocking through your robots.txt.

In the past, people have had issues with blocking perplexity or bytedance. So make sure to check back later that they are only hitting your robots.txt and not your disallowed pages.

Jes Scholz, explained to me earlier this week that there is also an llm.txt – which I shall have to go test! Let me know if you’ve ever used it before.

User-agent: Bytespider Disallow: / User-agent: ClaudeBot Disallow: / User-agent: GPTBot Disallow: / User-agent: PerplexityBot Disallow: / User-agent: AhrefsBot Disallow: / User-agent: Barkrowler Disallow: / User-agent: DotBot Disallow: / User-agent: SemrushBot Disallow: / User-agent: Screaming Frog Disallow: / User-agent: BLEXBot Disallow: / User-agent: BrightEdge Disallow: /

Blocking bots can save you money and bandwidth

Nerd Crawler, a comic book art website saved 60% of their bandwidth by blocking ByteDance! It used 60GB over 2 weeks. This can be massive, and if you want to impress your infrastructure team, they’ll be so happy with the savings to server costs.

Should we block bots?

I think we are too late. We missed our opportunity to stop Google and LLM’s from taking and repackaging our content. We should have stopped them when featured snippets came out.

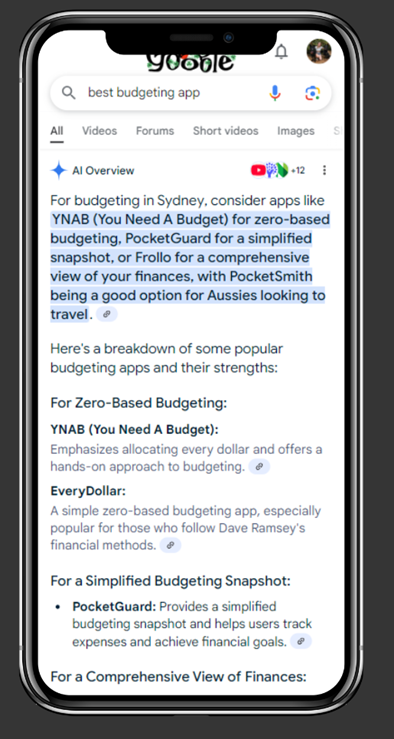

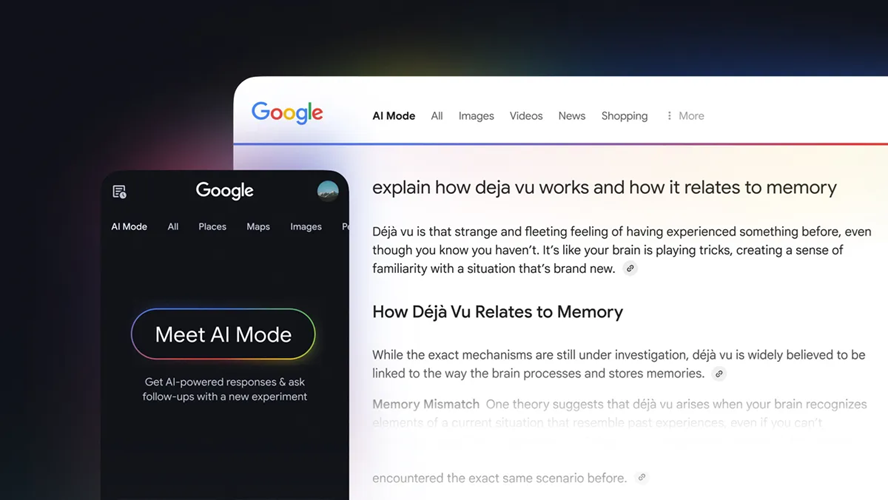

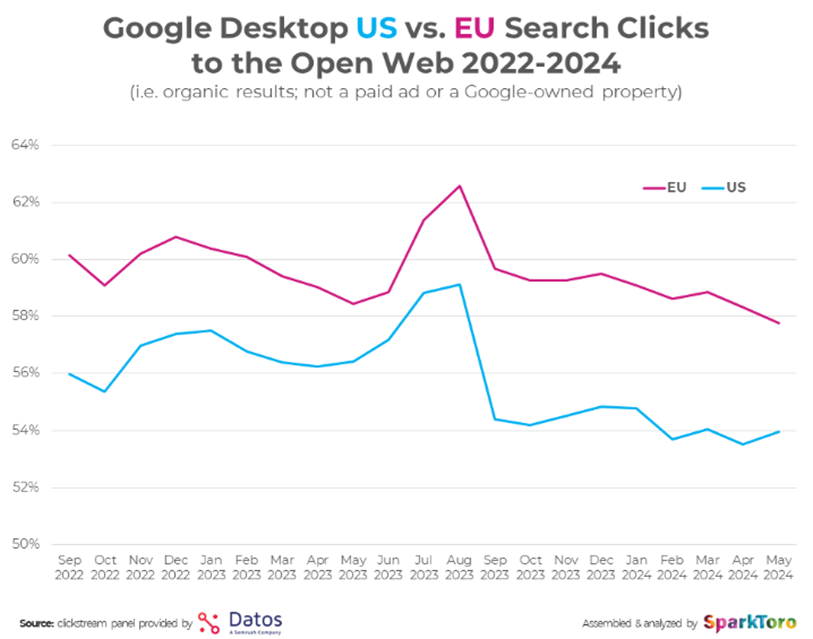

SparkToro has a great study on the number of clicks from Search with searches decreasing each month. I imagine this is only going to get worse with Google’s AI Overviews which we can’t currently opt out of as it’s treated as a search feature like a People Also Ask or Featured Snippet.

The EU is leading the charge with protecting content and you can see below the impact of GDPR when comparing the USA. I imagine Australia, would be very similar.

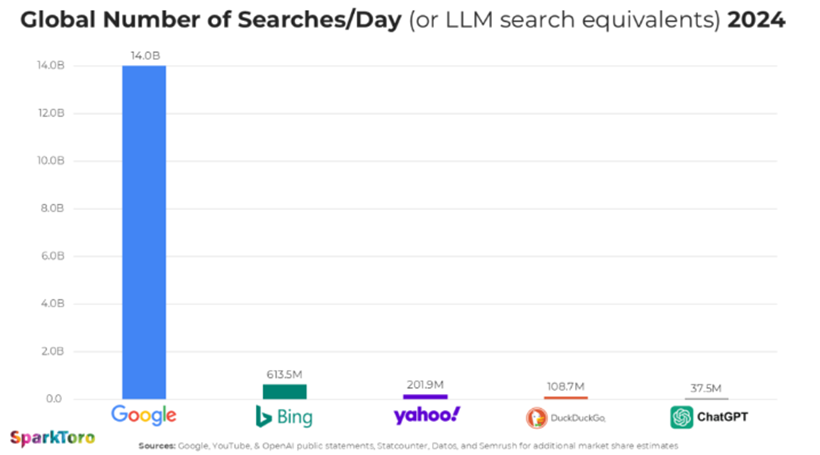

SparkToro looked at the number of searches per day across Search Engines and AI and Google owns that space. Even with Google still having the market share the world of search is changing and we as SEOs need to change with it. So, I won’t be blocking the AI bots as Google needs the competition.

I regularly hear that the older generation isn’t using AI, however last Saturday my partner’s mother said how much she loves Gemini and Google AI Overviews. So, we are heading towards a zero-click world and we truly are optimising for bots. So, it’s time to keep an eye on the bots.

^(Please note I’m a technical SEO, not an infrastructure legend, so let me know if there’s a clearer explanation.)

Leave a Reply